
Unearthing Outliers: Deep Dive into Anomaly Detection Techniques for Data Integrity

In the vast ocean of data, sometimes the most valuable insights aren't found in the average, but in the exceptions. These exceptions, often called anomalies, outliers, novelties, or deviations, are data points that don't conform to the expected pattern of behavior. Identifying them is the essence of anomaly detection, a critical task across various domains from cybersecurity to healthcare.

Why is this so important? Because these unusual patterns can be indicators of critical events:

- Fraud Detection: Uncovering suspicious financial transactions.

- Cybersecurity: Spotting network intrusions or unusual user behavior.

- Healthcare: Identifying rare diseases or adverse drug reactions.

- Manufacturing: Detecting defects in products or machinery malfunctions.

- System Monitoring: Pinpointing unusual server load or application errors.

Let's embark on a journey to explore the most effective anomaly detection techniques.

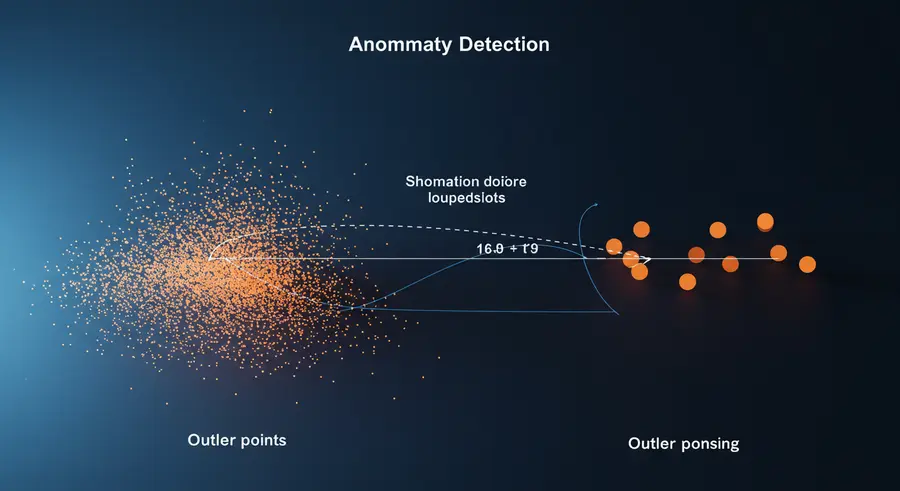

Visualizing the Concept: What is an Anomaly?

Imagine most of your data points forming a cohesive cluster. Anomalies are those points that lie far outside this cluster, standing alone.

Categories of Anomaly Detection Techniques

Anomaly detection methods can broadly be categorized based on the nature of the data and the learning approach.

1. Statistical Methods: The Foundation

Statistical anomaly detection techniques are often the first line of defense, especially when the data's distribution can reasonably be assumed. They work by defining a "normal" range and flagging anything outside this range as an anomaly.

A. Z-Score (or Standard Deviation Method)

This method assumes data follows a Gaussian (normal) distribution. A data point is considered an outlier if its Z-score (number of standard deviations away from the mean) exceeds a certain threshold (e.g., 2 or 3).

When to Use: Simple, effective for univariate data with a normal distribution. Limitations: Assumes normality; the mean and standard deviation are themselves distorted by outliers, so an extreme value can inflate the threshold and hide milder anomalies.

python

import numpy as np

from scipy import stats

data = [2, 3, 4, 5, 6, 7, 8, 100]

z_scores = np.abs(stats.zscore(data))

threshold = 2

anomalies = np.where(z_scores > threshold)[0]

print(f"Data points: {data}")

print(f"Z-scores: {z_scores}")

print(f"Anomalies (indices): {anomalies}")

print(f"Anomalous values: {[data[i] for i in anomalies]}")

B. IQR (Interquartile Range) Method

The IQR method is more robust to outliers as it doesn't rely on the mean and standard deviation. It defines outliers as data points that fall below Q1 - 1.5 * IQR or above Q3 + 1.5 * IQR, where Q1 and Q3 are the first and third quartiles, and IQR = Q3 - Q1.

When to Use: Effective for skewed distributions, less sensitive to extreme values. Limitations: May not capture subtle anomalies in complex datasets.

python

import numpy as np

data = [10, 12, 15, 16, 17, 18, 20, 22, 25, 150]

Q1 = np.percentile(data, 25)

Q3 = np.percentile(data, 75)

IQR = Q3 - Q1

lower_bound = Q1 - 1.5 * IQR

upper_bound = Q3 + 1.5 * IQR

anomalies = [x for x in data if x < lower_bound or x > upper_bound]

print(f"Data points: {data}")

print(f"Q1: {Q1}, Q3: {Q3}, IQR: {IQR}")

print(f"Lower Bound: {lower_bound}, Upper Bound: {upper_bound}")

print(f"Anomalous values: {anomalies}")

2. Machine Learning-Based Anomaly Detection Techniques

As data becomes more complex and high-dimensional, machine learning offers powerful tools to detect subtle deviations. These methods can be broadly classified into supervised, unsupervised, and semi-supervised approaches.

A. Supervised Learning for Anomaly Detection

Supervised methods require labeled data (normal vs. anomalous). They are less common in practice because anomalies are rare and difficult to label.

Algorithms: Classification algorithms like Support Vector Machines (SVM), Random Forests, or Neural Networks can be trained to classify new data points as normal or anomalous.

When to Use: When you have a sufficiently large and balanced dataset of labeled normal and anomalous instances. Limitations: Data imbalance is a major challenge; collecting enough anomaly samples is often impractical.
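As a minimal sketch of the supervised route, the example below trains a Random Forest on synthetic labeled data. The data, the 1000-to-30 class ratio, the stratified split, and the use of `class_weight="balanced"` to counter the imbalance are all illustrative assumptions rather than a recommended recipe.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Synthetic, illustrative data: many normal points near the origin,
# a handful of labeled anomalies far away from it
X_normal = rng.normal(loc=0.0, scale=1.0, size=(1000, 2))
X_anomaly = rng.normal(loc=6.0, scale=1.0, size=(30, 2))
X = np.vstack([X_normal, X_anomaly])
y = np.concatenate([np.zeros(1000, dtype=int), np.ones(30, dtype=int)])  # 1 = anomaly

# Stratify so that both splits keep some of the rare class
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# class_weight="balanced" reweights classes inversely to their frequency
clf = RandomForestClassifier(n_estimators=100, class_weight="balanced", random_state=0)
clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)
anomaly_recall = recall_score(y_test, y_pred)  # share of true anomalies that were caught
print(f"Recall on the anomaly class: {anomaly_recall:.2f}")
```

Recall on the anomaly class is reported instead of accuracy because, with data this imbalanced, a model that labels everything as normal would still score a high accuracy.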

B. Unsupervised Learning for Anomaly Detection

This is the most common approach, as it doesn't require pre-labeled data. It works by assuming that normal data points are more frequent and lie in a "dense" region, while anomalies are sparse and lie far from normal data.

i. Clustering-Based Methods (e.g., K-Means)

Clustering algorithms group similar data points together. Points that don't belong to any cluster or are far from cluster centroids can be considered anomalies.

How it works:

- Cluster the data.

- Calculate the distance of each point to its cluster centroid.

- Flag points with distances above a threshold as anomalies.

When to Use: Effective when normal data forms clear, compact clusters. Limitations: Performance degrades in high-dimensional data; sensitive to the number of clusters (K).

python

from sklearn.cluster import KMeans

import numpy as np

data = np.array([[1, 1], [1.5, 2], [3, 4], [5, 7], [3.5, 5], [4.5, 5], [3.5, 4.5], [10, 10], [0.5, 0.5]])

kmeans = KMeans(n_clusters=2, random_state=0, n_init='auto').fit(data)

distances = kmeans.transform(data).min(axis=1) # Distance to closest centroid

threshold = np.mean(distances) + 2 * np.std(distances)

anomalies_indices = np.where(distances > threshold)[0]

print(f"Data points:\n{data}")

print(f"Distances to closest centroid: {distances}")

print(f"Threshold for anomaly: {threshold}")

print(f"Anomalies (indices): {anomalies_indices}")

print(f"Anomalous values:\n{data[anomalies_indices]}")

ii. Density-Based Methods (e.g., LOF - Local Outlier Factor)

LOF measures how much the local density around a data point deviates from the density around its neighbors. A point is considered an outlier if its local density is significantly lower than that of its neighbors.

How it works: It compares the local density of a point to the local densities of its neighbors. An anomaly will have a much lower local density.

When to Use: Excellent for datasets whose density varies from region to region, where a single global distance threshold would miss local outliers. Limitations: Computationally expensive for very large datasets; sensitive to parameters like n_neighbors.

python

from sklearn.neighbors import LocalOutlierFactor

import numpy as np

data = np.array([[1, 1], [1.5, 2], [3, 4], [5, 7], [3.5, 5], [4.5, 5], [3.5, 4.5], [10, 10], [0.5, 0.5]])

lof = LocalOutlierFactor(n_neighbors=2)

# fit_predict returns labels: -1 for outliers, 1 for inliers

labels = lof.fit_predict(data)

# negative_outlier_factor_ holds -LOF. Lower (more negative) indicates more anomalous.

negative_lof_scores = lof.negative_outlier_factor_

anomalies_indices = np.where(labels == -1)[0]

print(f"Data points:\n{data}")

print(f"Labels from fit_predict: {labels}")

print(f"Negative LOF scores: {negative_lof_scores}")

print(f"Anomalies (indices): {anomalies_indices}")

print(f"Anomalous values:\n{data[anomalies_indices]}")

iii. Isolation Forest

Isolation Forest is an ensemble tree-based model that "isolates" anomalies by randomly selecting a feature and then randomly selecting a split value between the maximum and minimum values of the selected feature. Anomalies are those data points that require fewer splits to be isolated.

How it works: Anomalies are few and different, making them easier to "isolate" from the rest of the data. Normal points require many splits to be isolated.

When to Use: Highly effective for high-dimensional data, scalable, and performs well even with a large number of irrelevant features. Limitations: Less effective for detecting anomalies in very dense clusters; doesn't provide anomaly scores in a probabilistic sense.

python

from sklearn.ensemble import IsolationForest

import numpy as np

data = np.array([[1, 1], [1.5, 2], [3, 4], [5, 7], [3.5, 5], [4.5, 5], [3.5, 4.5], [10, 10], [0.5, 0.5]])

model = IsolationForest(random_state=42)

model.fit(data)

# Predict scores: negative for outliers, positive for inliers

# Lower score means more anomalous

scores = model.decision_function(data)

# -1 for outliers, 1 for inliers

predictions = model.predict(data)

anomalies_indices = np.where(predictions == -1)[0]

print(f"Data points:\n{data}")

print(f"Isolation Forest scores: {scores}")

print(f"Predictions (-1 for anomaly, 1 for normal): {predictions}")

print(f"Anomalies (indices): {anomalies_indices}")

print(f"Anomalous values:\n{data[anomalies_indices]}")

C. Semi-Supervised Learning for Anomaly Detection

This approach assumes that only "normal" data is available for training. The model learns the characteristics of normal behavior, and any deviation from this learned pattern is flagged as an anomaly. One-Class SVM is a popular algorithm in this category.

One-Class SVM: Learns a decision boundary that encapsulates the "normal" data points. Anything outside this boundary is an outlier.

When to Use: When you have a lot of normal data but very few or no anomaly examples. Limitations: Can be sensitive to kernel choice and hyperparameters; scales poorly with very large datasets.
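A minimal sketch of this idea with scikit-learn's `OneClassSVM` follows. The synthetic training data, the four test points, and the settings `kernel="rbf"`, `gamma="scale"`, and `nu=0.05` are assumptions chosen for illustration; in practice they would need tuning.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)

# Training set contains normal behavior only (synthetic points around the origin)
X_train = rng.normal(loc=0.0, scale=0.5, size=(500, 2))

# New observations: two close to the training data, two far from it
X_new = np.array([[0.1, -0.2], [0.3, 0.4], [5.0, 5.0], [-4.0, 6.0]])

# nu is an upper bound on the fraction of training points treated as outliers
ocsvm = OneClassSVM(kernel="rbf", gamma="scale", nu=0.05)
ocsvm.fit(X_train)

pred = ocsvm.predict(X_new)               # 1 = inside the boundary, -1 = outside
scores = ocsvm.decision_function(X_new)   # signed distance to the boundary

for point, label, score in zip(X_new, pred, scores):
    print(f"{point} -> label {label:+d}, score {score:.3f}")
```

Because the model never sees an anomaly during training, `nu` is the main lever for how tightly the boundary wraps the normal data.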

3. Deep Learning-Based Anomaly Detection Techniques

Deep learning models, particularly Autoencoders and Generative Adversarial Networks (GANs), are increasingly used for anomaly detection, especially with complex, high-dimensional, or sequential data like images, video, and time series.

A. Autoencoders

Autoencoders are neural networks trained to reconstruct their input. They learn a compressed representation (encoding) of the input data and then reconstruct it.

How it works:

- Train an autoencoder on normal data. The autoencoder learns to efficiently compress and decompress normal patterns.

- When an anomalous data point is fed into the trained autoencoder, it will have a high reconstruction error because the network hasn't learned to reconstruct such patterns accurately.

- A high reconstruction error indicates an anomaly.

When to Use: Excellent for high-dimensional data (images, text), time series, and when learning a compact representation of normal data is beneficial. Limitations: Requires careful tuning; training can be time-consuming; setting the reconstruction error threshold can be tricky.

python

# Autoencoder sketch for anomaly detection.

# Requires TensorFlow/Keras (for example: pip install tensorflow).

import numpy as np

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Dense

# 1. Generate some synthetic normal data (points around the origin)

normal_data = np.random.normal(loc=0, scale=0.5, size=(1000, 2))

# Add some anomalies (points far from the origin)

anomaly_data = np.random.normal(loc=5, scale=1.0, size=(50, 2))

# The combined set is only scored; training uses normal_data alone

full_data = np.vstack([normal_data, anomaly_data])

# 2. Define the autoencoder model (simple example)

input_dim = normal_data.shape[1]

encoding_dim = 1  # Compressed representation

input_layer = Input(shape=(input_dim,))

encoder = Dense(encoding_dim, activation='relu')(input_layer)

# Linear output: the data is not scaled to [0, 1], so 'sigmoid' could not reproduce it

decoder = Dense(input_dim, activation='linear')(encoder)

autoencoder = Model(inputs=input_layer, outputs=decoder)

autoencoder.compile(optimizer='adam', loss='mse')

# 3. Train on normal data only

autoencoder.fit(normal_data, normal_data, epochs=50, batch_size=32, shuffle=True, verbose=0)

# 4. Compute per-point reconstruction errors

train_mse = np.mean(np.power(normal_data - autoencoder.predict(normal_data, verbose=0), 2), axis=1)

mse = np.mean(np.power(full_data - autoencoder.predict(full_data, verbose=0), 2), axis=1)

# 5. Set the threshold from errors on normal data, so anomalies cannot inflate it

threshold = np.mean(train_mse) + 3 * np.std(train_mse)  # Example thresholding

anomalies = full_data[mse > threshold]

print(f"Reconstruction error threshold: {threshold:.4f}")

print(f"Flagged {len(anomalies)} of {len(full_data)} points as anomalies")

Time Series Anomaly Detection

Time series data, where observations are ordered in time, presents unique challenges. Anomalies can be point anomalies (single spikes), contextual anomalies (normal value in a different context), or collective anomalies (a sequence of values that is anomalous together).

Techniques:

- Statistical: ARIMA models can forecast future values; large deviations from forecasts are anomalies.

- Machine Learning: LSTMs (Long Short-Term Memory networks) are deep learning models particularly adept at learning temporal patterns. They can be used similarly to autoencoders, where high reconstruction error on a sequence indicates an anomaly.

- Change Point Detection: Algorithms that identify points in time where the statistical properties of a time series change significantly.
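The forecast-and-compare idea behind the statistical approach can be sketched with NumPy alone. To stay dependency-free, this example swaps ARIMA for a much simpler trailing moving-average forecast; the synthetic series, the window of 10, and the cutoff of 4 standard deviations are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic series (assumed for illustration): slow seasonal pattern plus small noise
t = np.arange(200)
series = 10 + 0.5 * np.sin(2 * np.pi * t / 100) + rng.normal(0, 0.1, size=t.size)
series[120] += 3.0  # inject a single point anomaly

window = 10

# One-step-ahead forecast: the mean of the previous `window` observations.
# This is a stand-in for a real forecasting model such as ARIMA.
forecasts = np.array([series[i - window:i].mean() for i in range(window, series.size)])
residuals = series[window:] - forecasts

# Flag time steps whose forecast error is unusually large
threshold = 4 * residuals.std()
anomaly_idx = np.where(np.abs(residuals) > threshold)[0] + window

print(f"Residual threshold: {threshold:.3f}")
print(f"Anomalous time steps: {anomaly_idx}")
```

The same residual test applies unchanged if the moving average is replaced by forecasts from a fitted ARIMA or LSTM model.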

Challenges in Anomaly Detection

Identifying anomalous patterns is not without its hurdles:

- Defining "Normal": What constitutes normal behavior can be highly subjective and change over time.

- Data Imbalance: Anomalies are inherently rare, leading to highly skewed datasets, which can make supervised learning challenging.

- High Dimensionality: As the number of features increases, the "curse of dimensionality" can make it difficult for distance-based or density-based methods to work effectively.

- Noise: Random noise in data can be mistaken for anomalies.

- Concept Drift: The definition of "normal" can evolve, requiring models to adapt and learn new patterns.

- Lack of Labels: Most real-world anomaly detection scenarios lack labeled anomaly data.
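The high-dimensionality challenge can be made concrete with a small experiment: as dimensions are added, the nearest and farthest neighbors of a point end up at almost the same distance, which weakens any distance-based notion of "far from the rest". The sketch below uses uniformly random points, and the helper name `distance_contrast` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def distance_contrast(n_dims, n_points=500):
    """Relative gap (max - min) / min between the farthest and nearest
    of n_points random points, measured from one random query point."""
    points = rng.uniform(size=(n_points, n_dims))
    query = rng.uniform(size=n_dims)
    distances = np.linalg.norm(points - query, axis=1)
    return (distances.max() - distances.min()) / distances.min()

contrasts = {n_dims: distance_contrast(n_dims) for n_dims in (2, 10, 100, 1000)}

for n_dims, contrast in contrasts.items():
    print(f"{n_dims:>4} dimensions: relative distance contrast = {contrast:.2f}")
```

A contrast that shrinks toward zero means that "near" and "far" become hard to tell apart, which is one reason dimensionality reduction or feature selection often precedes distance-based or density-based detectors.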

Best Practices and Future Trends

To overcome these challenges and build robust anomaly detection systems:

- Data Preprocessing: Clean, normalize, and transform your data. Feature engineering can significantly improve model performance.

- Domain Expertise: Collaborate with domain experts to understand what constitutes a true anomaly and to help interpret results.

- Ensemble Methods: Combine multiple anomaly detection techniques to improve robustness and capture different types of anomalies.

- Explainable AI (XAI): For critical applications, understanding why a point is flagged as an anomaly is crucial. Techniques like SHAP or LIME can help.

- Real-time Detection: For streaming data, models need to process data and detect anomalies with low latency.

- Active Learning: Involve human feedback to iteratively label anomalies and improve model accuracy.
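As a small illustration of the ensemble idea, the sketch below lets the two statistical detectors from earlier in the article vote on each point. The helper names `zscore_flags` and `iqr_flags`, the sample data, and the simple vote count are assumptions made for this example.

```python
import numpy as np

def zscore_flags(x, threshold=2.0):
    """True where the absolute Z-score exceeds the threshold."""
    z = np.abs((x - x.mean()) / x.std())
    return z > threshold

def iqr_flags(x, k=1.5):
    """True where a value falls outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x < q1 - k * iqr) | (x > q3 + k * iqr)

data = np.array([10, 12, 15, 16, 17, 18, 20, 22, 25, 40, 150], dtype=float)

# Each detector contributes one vote per point
votes = zscore_flags(data).astype(int) + iqr_flags(data).astype(int)

consensus = data[votes == 2]   # flagged by both detectors
any_vote = data[votes >= 1]    # flagged by at least one detector

print(f"Votes per point: {votes}")
print(f"Flagged by both detectors: {consensus}")
print(f"Flagged by at least one detector: {any_vote}")
```

On this sample, 150 receives both votes, while 40 is flagged only by the IQR rule because the extreme value inflates the standard deviation used by the Z-score. The vote count therefore doubles as a rough confidence level.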

The field of anomaly detection continues to evolve rapidly, with ongoing research in areas like:

- Graph-based Anomaly Detection: For identifying anomalies in networked data.

- Adversarial Anomaly Detection: Using GANs to generate challenging negative samples for robust model training.

- Explainable Anomaly Detection: Developing models that not only detect anomalies but also provide clear reasons for their decisions.

Conclusion: Mastering the Art of Anomaly Detection

Anomaly detection techniques are indispensable tools in the arsenal of any data professional. Whether you're safeguarding systems, optimizing operations, or uncovering critical insights, the ability to effectively identify the unusual is paramount. By understanding the diverse array of methods, from foundational statistical approaches to cutting-edge deep learning, and by applying them thoughtfully, we can uncover the hidden stories in our data, fortify our systems, and turn irregularities into opportunities for deeper understanding.

Remember, data speaks; we just need to listen for the whispers of the unusual. What anomaly detection challenges are you facing? Share your thoughts and experiences in the comments below!